Chronos User Guide

1 Overview

Chronos is an application framework for building large-scale time series analysis applications.

You can use Chronos to do:

Data pre/post-processing and feature generation (using TSDataset)

Time Series Forecasting (using Standalone Forecasters, Auto Models (with HPO) or AutoTS (full AutoML enabled pipelines))

Anomaly Detection (using Anomaly Detectors)

Synthetic Data Generation (using Simulators)

2 Install

Install analytics-zoo with target [automl] to install the additional dependencies for Chronos.

conda create -n my_env python=3.7

conda activate my_env

pip install --pre --upgrade analytics-zoo[automl]

3 Initialization

Chronos uses Orca to enable distributed training and AutoML capabilities. Initialize Orca as shown below when you want to:

Use the distributed mode of a standalone forecaster.

Use AutoML to tune your model in a distributed fashion.

View Orca Context for more details. Note that argument init_ray_on_spark must be True for Chronos.

from zoo.orca import init_orca_context

# args is assumed to come from your own argument parser

if args.cluster_mode == "local":

    init_orca_context(cluster_mode="local", cores=4, init_ray_on_spark=True) # run in local mode

elif args.cluster_mode == "k8s":

    init_orca_context(cluster_mode="k8s", num_nodes=2, cores=2, init_ray_on_spark=True) # run on K8s cluster

elif args.cluster_mode == "yarn":

    init_orca_context(cluster_mode="yarn-client", num_nodes=2, cores=2, init_ray_on_spark=True) # run on Hadoop YARN cluster
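The `args` object used above is not defined in this guide. The following is a minimal sketch of one way to produce it, assuming a hypothetical `--cluster_mode` command-line flag parsed with `argparse`. The keyword arguments are copied from the calls above; `ORCA_KWARGS` and `build_orca_kwargs` are illustrative names, not part of Chronos or Orca.

```python
import argparse

# Keyword arguments copied from the init_orca_context calls shown above.
ORCA_KWARGS = {
    "local": dict(cluster_mode="local", cores=4),
    "k8s": dict(cluster_mode="k8s", num_nodes=2, cores=2),
    "yarn": dict(cluster_mode="yarn-client", num_nodes=2, cores=2),
}


def build_orca_kwargs(argv=None):
    """Parse a hypothetical --cluster_mode flag and return init_orca_context kwargs."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--cluster_mode", choices=sorted(ORCA_KWARGS), default="local")
    args = parser.parse_args(argv)
    kwargs = dict(ORCA_KWARGS[args.cluster_mode])
    kwargs["init_ray_on_spark"] = True  # required for Chronos, as noted above
    return kwargs


kwargs = build_orca_kwargs(["--cluster_mode", "yarn"])
print(kwargs)
# With analytics-zoo installed, the result could be passed on as:
#   from zoo.orca import init_orca_context
#   init_orca_context(**kwargs)
```

Keeping the per-mode settings in one dictionary makes it easy to see that only the cluster-specific arguments differ, while `init_ray_on_spark=True` is applied uniformly.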

View Quick Start for a more detailed example.

4 Data Processing and Feature Engineering

Time series data is a special form of data with its own specific operations. Chronos provides TSDataset as a time series dataset abstraction for data processing (e.g. impute, deduplicate, resample, scale/unscale, roll sampling) and automatic feature engineering (e.g. datetime features, aggregation features). Most methods support cascade calls (method chaining). A TSDataset can be initialized from a pandas dataframe and used directly in AutoTSEstimator. It can also be converted to a pandas dataframe or numpy ndarray for Forecasters and Anomaly Detectors.

TSDataset is designed for general time series processing while providing many specific operations for the convenience of different tasks (e.g. forecasting, anomaly detection).

4.1 Basic concepts

A time series can be interpreted as a sequence of real values ordered by timestamp. A time series dataset can combine one or a very large number of time series. It may contain multiple time series because users may collect different time series over the same or different periods of time (e.g. an AIOps dataset may have CPU usage ratio and memory usage ratio data for two servers over a period of time; this dataset contains four time series).

In TSDataset, we provide 2 possible dimensions to construct a high-dimensional time series dataset (i.e. feature dimension and id dimension).

feature dimension: Time series along this dimension might be independent or related. Even if they are related, they are assumed to have different patterns and distributions and to be collected over the same period of time. For example, the CPU usage ratio and memory usage ratio of the same server over a period of time.

id dimension: Time series along this dimension are assumed to have the same patterns and distributions and might be collected over the same or different periods of time. For example, the CPU usage ratio of two servers over a period of time.

All preprocessing operations are applied to each independent time series (i.e. on both the feature dimension and the id dimension), while feature scaling is carried out only on the feature dimension.
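To make the two dimensions concrete, here is a small plain-Python sketch (it does not use TSDataset) that groups the AIOps example into one independent series per (id, feature) pair. The row values are made up for illustration.

```python
from collections import defaultdict

# Rows mirror the AIOps example: (server id, datetime, CPU usage, memory usage).
rows = [
    (0, "08:39", 93, 24),
    (0, "08:40", 91, 24),
    (1, "08:39", 73, 79),
    (1, "08:40", 72, 80),
]
feature_names = ["CPU usage", "Mem usage"]

# One independent series per (id, feature) pair.
series = defaultdict(list)
for server_id, _dt, *values in rows:
    for name, value in zip(feature_names, values):
        series[(server_id, name)].append(value)

for key in sorted(series):
    print(key, series[key])
print("number of independent time series:", len(series))
```

Two ids times two features gives the four time series mentioned in the example above.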

4.2 Create a TSDataset

Currently TSDataset only supports initializing from a pandas dataframe through TSDataset.from_pandas. A typical valid time series dataframe df is shown below.

You can initialize a TSDataset by simply:

# Server id    Datetime          CPU usage    Mem usage

# 0            08:39 2021/7/9    93           24

# 0            08:40 2021/7/9    91           24

# 0            08:41 2021/7/9    93           25

# 0            ...               ...          ...

# 1            08:39 2021/7/9    73           79

# 1            08:40 2021/7/9    72           80

# 1            08:41 2021/7/9    79           80

# 1            ...               ...          ...

from zoo.chronos.data import TSDataset

tsdata = TSDataset.from_pandas(df,

                               dt_col="Datetime",

                               id_col="Server id",

                               target_col=["CPU usage",

                                           "Mem usage"])

target_col is a list of all elements along the feature dimension, while id_col is the identifier that distinguishes the id dimension. dt_col is the datetime column. In extra_feature_col (not shown in this case), you should list the features that are not targets of your task (e.g. you will not perform forecasting or anomaly detection on these columns).

If you are building a prototype for your forecasting/anomaly detection task and need to split your dataset into train/valid/test sets, you can use the with_split parameter. TSDataset supports splitting by ratio through val_ratio and test_ratio.
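The effect of val_ratio and test_ratio can be sketched in plain Python. This is only an illustration under the assumption that the split is chronological (train first, then validation, then test) and unshuffled; the exact ordering and rounding used by TSDataset may differ. `split_by_ratio` is a hypothetical helper, not a Chronos function.

```python
def split_by_ratio(records, val_ratio=0.1, test_ratio=0.1):
    """Split time-ordered records into train/validation/test without shuffling."""
    n = len(records)
    n_test = int(n * test_ratio)
    n_val = int(n * val_ratio)
    n_train = n - n_val - n_test
    train = records[:n_train]
    val = records[n_train:n_train + n_val]
    test = records[n_train + n_val:]
    return train, val, test


records = list(range(100))  # stand-in for 100 time-ordered rows
train, val, test = split_by_ratio(records, val_ratio=0.1, test_ratio=0.1)
print(len(train), len(val), len(test))
```

Splitting by position rather than at random keeps every validation and test point later in time than the training data, which avoids leaking future information into training.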

4.3 Time series dataset preprocessing

TSDataset now supports impute, deduplicate and resample. You may fill missing points with impute in different modes, remove records that are exactly identical with deduplicate, and change the sampling frequency with resample. A typical cascade call for preprocessing is:

tsdata.deduplicate().resample(interval="2s").impute()
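As a rough illustration of what two of these steps do, here is a plain-Python sketch of deduplication and of imputation that carries the last observed value forward. It is not the TSDataset implementation, resampling is omitted for brevity, and the forward-fill behaviour is an assumption about one possible impute mode.

```python
def deduplicate(records):
    """Drop records that are exact duplicates of an earlier record, keeping order."""
    seen = set()
    unique = []
    for record in records:
        if record not in seen:
            seen.add(record)
            unique.append(record)
    return unique


def impute_last(records):
    """Replace a missing value (None) with the most recent observed value."""
    filled = []
    last = None
    for timestamp, value in records:
        if value is None:
            value = last  # stays None if nothing has been observed yet
        else:
            last = value
        filled.append((timestamp, value))
    return filled


raw = [("08:39", 93), ("08:39", 93), ("08:40", None), ("08:41", 95)]
clean = impute_last(deduplicate(raw))
print(clean)
```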

4.4 Feature scaling

Scaling all features to one distribution is important, especially when training a machine learning/deep learning system. TSDataset supports all the scalers in sklearn through the scale and unscale methods. Since a scaler should not be fit on the validation and test sets, a typical call for scaling operations is:

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

# scale: fit the scaler on the training set only

for tsdata in [tsdata_train, tsdata_valid, tsdata_test]:

    tsdata.scale(scaler, fit=tsdata is tsdata_train)

# unscale

for tsdata in [tsdata_train, tsdata_valid, tsdata_test]:

    tsdata.unscale()

unscale_numpy is specially designed for forecasters. Users may unscale the output of a forecaster by this operation. A typical call is:

# the scaler was already fit on the training set, so do not refit it here

x, y = (tsdata_test.scale(scaler, fit=False)

        .roll(lookback=..., horizon=...)

        .to_numpy())

yhat = forecaster.predict(x)

unscaled_yhat = tsdata_test.unscale_numpy(yhat)

unscaled_y = tsdata_test.unscale_numpy(y)

# calculate metric by unscaled_yhat and unscaled_y
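The reason for fitting the scaler on the training set only, and for unscaling predictions before computing metrics, can be shown with a small standard-library sketch. `SimpleStandardScaler` is a hypothetical stand-in written for this example (it mimics standard scaling with the population standard deviation); it is not the sklearn or Chronos implementation.

```python
from statistics import mean, pstdev


class SimpleStandardScaler:
    """Tiny stand-in for a standard scaler: z = (x - mean) / std."""

    def fit(self, values):
        self.mean_ = mean(values)
        self.std_ = pstdev(values) or 1.0  # avoid division by zero
        return self

    def transform(self, values):
        return [(v - self.mean_) / self.std_ for v in values]

    def inverse_transform(self, values):
        return [v * self.std_ + self.mean_ for v in values]


train = [10.0, 12.0, 14.0, 16.0]
test = [18.0, 20.0]

scaler = SimpleStandardScaler().fit(train)        # statistics come from train only
scaled_test = scaler.transform(test)              # test reuses the train statistics
restored = scaler.inverse_transform(scaled_test)  # back to the original units
print(scaled_test, restored)
```

Because the test data is transformed with the training mean and standard deviation, no information from the test set leaks into preprocessing, and the inverse transform returns values in the original units for metric calculation.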

4.5 Feature generation

Besides the historical target data and the extra features provided by users, some additional features can be generated automatically by TSDataset. gen_dt_feature helps users generate 10 datetime-related features (e.g. MONTH, WEEKDAY, …). gen_global_feature and gen_rolling_feature are powered by tsfresh and generate aggregated features (e.g. min, max, …) for each time series or for each rolling window, respectively.
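To give a feel for what datetime-related features look like, here is a minimal sketch using only the standard library. MONTH and WEEKDAY are named in the text above; HOUR and IS_WEEKEND are illustrative names chosen for this example and are not claimed to match the features that gen_dt_feature produces.

```python
from datetime import datetime


def datetime_features(ts):
    """Return a few calendar features for one timestamp."""
    return {
        "MONTH": ts.month,
        "WEEKDAY": ts.weekday(),               # Monday is 0, Sunday is 6
        "HOUR": ts.hour,
        "IS_WEEKEND": int(ts.weekday() >= 5),  # Saturday or Sunday
    }


stamp = datetime(2021, 7, 9, 8, 39)  # first timestamp of the example dataframe
features = datetime_features(stamp)
print(features)
```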

4.6 Sampling and exporting

A time series dataset needs to be sampled and exported as a numpy ndarray/dataloader to be used in machine learning and deep learning models (e.g. forecasters, anomaly detectors, auto models, etc.).

Warning

You don’t need to call any sampling or exporting methods introduced in this section when using AutoTSEstimator.

4.6.1 Roll sampling

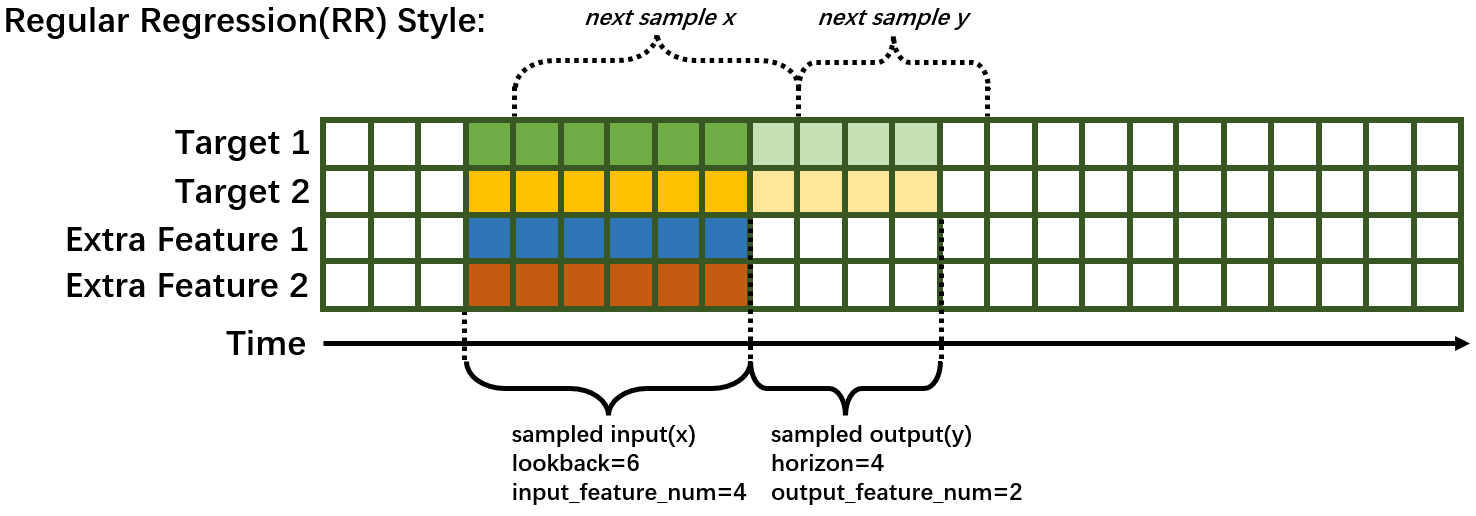

Roll sampling (or sliding window sampling) is useful when you want to train an RR type supervised deep learning forecasting model. It works as the diagram shows. Please refer to the API doc of roll for detailed behavior. Users can simply export the sampling result as a numpy ndarray by to_numpy or as a pytorch dataloader by to_torch_data_loader.

Note

Difference between `roll` and `to_torch_data_loader`:

.roll(…) performs the rolling before RR forecasters/auto models training while .to_torch_data_loader(roll=True, …) performs rolling during the training.

It is fine to use either of them when you have a relatively small dataset (less than 1G). .to_torch_data_loader(roll=True, …) is recommended when you have a large dataset (larger than 1G) to save memory usage.

Note

Roll sampling format:

As described in the RR style forecasting concept, the sampling result will have the following shape requirement.

x: (sample_num, lookback, input_feature_num)

y: (sample_num, horizon, output_feature_num)

Please follow the same shape if you use a customized data creator.

A typical call of roll is as follows:

# forecaster

x, y = tsdata.roll(lookback=..., horizon=...).to_numpy()

forecaster.fit((x, y))
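The sliding-window idea behind roll sampling can be sketched in plain Python for a single univariate series. This is an illustration only: `roll` here is a hypothetical helper rather than the TSDataset method, and the (sample_num, length, feature_num) layout of the nested lists is an assumption made for this example.

```python
def roll(series, lookback, horizon):
    """Slide a window over a univariate series.

    Returns x with shape (sample_num, lookback, 1)
    and y with shape (sample_num, horizon, 1) as nested lists.
    """
    sample_num = len(series) - lookback - horizon + 1
    x, y = [], []
    for start in range(sample_num):
        past = series[start:start + lookback]
        future = series[start + lookback:start + lookback + horizon]
        x.append([[v] for v in past])
        y.append([[v] for v in future])
    return x, y


series = [1, 2, 3, 4, 5, 6]
x, y = roll(series, lookback=3, horizon=2)
print(len(x), x[0], y[0])
```

A series of length n yields n - lookback - horizon + 1 samples, so six points with lookback=3 and horizon=2 produce two (x, y) pairs.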

4.6.2 Pandas Exporting

Now we support pandas dataframe exporting through to_pandas() for users to carry out their own transformation. Here is an example of using only one time series for anomaly detection.

# anomaly detector on "target" col

x = tsdata.to_pandas()["target"].to_numpy()

anomaly_detector.fit(x)

View TSDataset API Doc for more details.

5 Forecasting

Chronos provides both deep learning/machine learning models and traditional statistical models for forecasting.

There are three ways to do forecasting:

Use the highly integrated AutoTS pipeline with auto feature generation, data pre/post-processing, and hyperparameter optimization.

Use auto forecasting models with auto hyperparameter optimization.

Use standalone forecasters.

| Model | Style | Multi-Variate | Multi-Step | Distributed* | Auto Models | AutoTS | Backend |
|---|---|---|---|---|---|---|---|
| LSTM | RR | ✅ | ❌ | ✅ | ✅ | ✅ | pytorch |
| Seq2Seq | RR | ✅ | ✅ | ✅ | ✅ | ✅ | pytorch |
| TCN | RR | ✅ | ✅ | ✅ | ✅ | ✅ | pytorch |
| MTNet | RR | ✅ | ❌ | ✅ | ❌ | ✳️*** | tensorflow |
| TCMF | TS | ✅ | ✅ | ✳️** | ❌ | ❌ | pytorch |
| Prophet | TS | ❌ | ✅ | ❌ | ✅ | ❌ | prophet |
| ARIMA | TS | ❌ | ✅ | ❌ | ✅ | ❌ | pmdarima |

* Distributed training/inferencing is only supported by standalone forecasters.

** TCMF only partially supports distributed training.

*** Auto tuning of MTNet is only supported in our deprecated AutoTS API.

5.1 Time Series Forecasting Concepts

Time series forecasting is one of the most popular tasks on time series data. In short, forecasting aims at predicting the future by using the knowledge you can learn from the history.

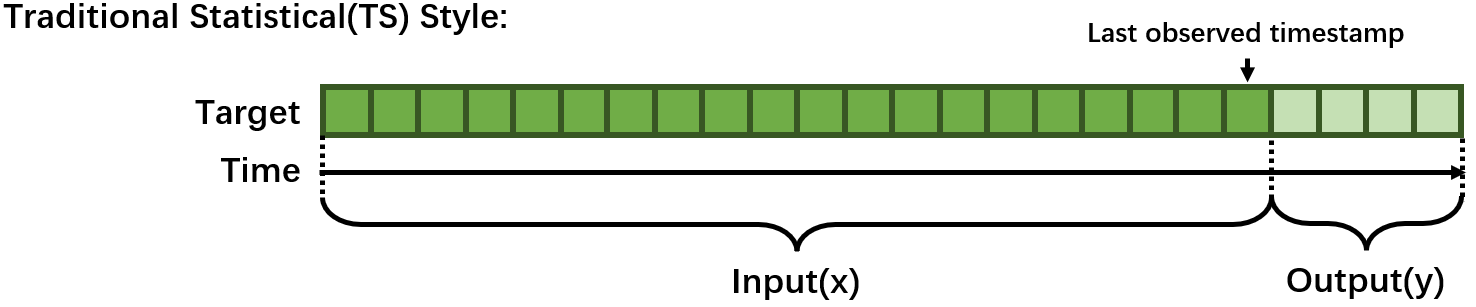

5.1.1 Traditional Statistical (TS) Style

Traditionally, the time series forecasting problem was formulated with rich mathematical fundamentals and statistical models. Typically, one model can only handle one time series: it is fit on the whole time series up to the last observed timestamp and predicts the next few steps. Training (fit) is needed every time you change the last observed timestamp.

5.1.2 Regular Regression (RR) Style

In recent years, common deep learning architectures (e.g. RNN, CNN, Transformer, etc.) have been successfully applied to forecasting problems. In this style, forecasting is transformed into a supervised learning regression problem. A model can predict several time series. Typically, a sampling process based on a sliding window is needed. Some terminology is explained as follows:

lookback/past_seq_len: the length of historical data along time. This number is tunable.

horizon/future_seq_len: the length of predicted data along time. This number depends on the task definition. If this value is larger than 1, the forecasting task is Multi-Step.

input_feature_num: the number of variables the model can observe. This number is tunable since we can select a subset of extra features to use.

output_feature_num: the number of variables the model predicts. This number depends on the task definition. If this value is larger than 1, the forecasting task is Multi-Variate.

5.2 Use AutoTS Pipeline

For AutoTS Pipeline, we will leverage AutoTSEstimator, TSPipeline and preferably TSDataset. A typical usage of the AutoTS pipeline contains 3 steps.

Prepare a TSDataset or customized data creator.

Init an AutoTSEstimator and call .fit() on the data.

Use the returned TSPipeline for further development.

Warning

The AutoTSTrainer workflow has been deprecated; no feature updates or performance improvements will be carried out. Users of AutoTSTrainer may refer to the Chronos API doc.

Note

AutoTSEstimator currently only supports the pytorch backend.

View Quick Start for a more detailed example.

5.2.1 Prepare dataset

AutoTSEstimator supports 2 types of data input.

You can easily prepare your data in TSDataset (recommended). You may refer to section 4 (Data Processing and Feature Engineering) for detailed information on preparing your TSDataset with proper data processing and feature generation. Here is a typical TSDataset preparation.

from zoo.chronos.data import TSDataset

from sklearn.preprocessing import StandardScaler

tsdata_train, tsdata_val, tsdata_test = TSDataset.from_pandas(

    df, dt_col="timestamp", target_col="value",

    with_split=True, val_ratio=0.1, test_ratio=0.1)

standard_scaler = StandardScaler()

for tsdata in [tsdata_train, tsdata_val, tsdata_test]:

    # fit the scaler on the training set only

    (tsdata.gen_dt_feature()

           .impute(mode="last")

           .scale(standard_scaler, fit=(tsdata is tsdata_train)))

You can also create your own data creator. The data creator takes a dictionary config and returns a pytorch dataloader. Users may define their own customized key and add them to the search space. “batch_size” is the only fixed key.

from torch.utils.data import DataLoader

def training_data_creator(config):

    # "batch_size" is the only fixed key in config

    return DataLoader(..., batch_size=config['batch_size'])

5.2.2 Create an AutoTSEstimator

AutoTSEstimator depends on the Distributed Hyper-parameter Tuning supported by Project Orca. It also provides time series only functionalities and optimization. Here is a typical initialization process.

import zoo.orca.automl.hp as hp

from zoo.chronos.autots import AutoTSEstimator

auto_estimator = AutoTSEstimator(model='lstm',

search_space='normal',

past_seq_len=hp.randint(1, 10),

future_seq_len=1,

selected_features="auto")

We prebuild three default search spaces for each built-in model, which you can use by setting search_space to “minimal”, “normal”, or “large”. You can also define your own search space in a dictionary. A larger search space generally yields better accuracy at the cost of more tuning time.

past_seq_len can be set as an hp sample function; the proper range is highly related to your data. A range between 0.5 cycles and 3 cycles is reasonable.
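The 0.5 to 3 cycle rule of thumb can be turned into concrete bounds with a little arithmetic. The sketch below assumes you already know the cycle length of your data in time steps; `past_seq_len_range` is a hypothetical helper, not part of Chronos.

```python
import math


def past_seq_len_range(cycle_length, low=0.5, high=3.0):
    """Return (min, max) lookback steps for a cycle measured in time steps."""
    lower = max(1, math.floor(cycle_length * low))
    upper = math.ceil(cycle_length * high)
    return lower, upper


# Hourly data with a daily cycle has 24 steps per cycle.
lower, upper = past_seq_len_range(24)
print(lower, upper)
# The bounds could then be passed to a sampler such as hp.randint(lower, upper).
```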

selected_features is set to “auto” by default, where the AutoTSEstimator will find the best subset of extra features to help the forecasting task.

5.2.3 Fit on AutoTSEstimator

Fitting on AutoTSEstimator is fairly easy. A TSPipeline will be returned once fitting is completed.

ts_pipeline = auto_estimator.fit(data=tsdata_train,

validation_data=tsdata_val,

batch_size=hp.randint(32, 64),

epochs=5)

For detailed information and settings, please refer to the AutoTSEstimator API doc.

5.2.4 Development on TSPipeline

You may carry out predict, evaluate, incremental training or save/load for further development.

# predict with the best trial

y_pred = ts_pipeline.predict(tsdata_test)

# evaluate the result pipeline

mse, smape = ts_pipeline.evaluate(tsdata_test, metrics=["mse", "smape"])

print("Evaluate: the mean square error is", mse)

print("Evaluate: the smape value is", smape)

# save the pipeline

my_ppl_file_path = "/tmp/saved_pipeline"

ts_pipeline.save(my_ppl_file_path)

# restore the pipeline for further deployment

from zoo.chronos.autots import TSPipeline

loaded_ppl = TSPipeline.load(my_ppl_file_path)

For detailed information, please refer to the TSPipeline API doc.
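For readers unfamiliar with the two metrics requested from evaluate above, here is a plain-Python sketch of how they are commonly defined. Several sMAPE variants exist; the formula below is one common definition expressed in percent, and the Chronos implementation may use a different scaling.

```python
def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def smape(y_true, y_pred):
    """Symmetric MAPE in percent: mean of 2|t - p| / (|t| + |p|) * 100."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        denom = abs(t) + abs(p)
        total += 0.0 if denom == 0 else 2 * abs(t - p) / denom
    return 100 * total / len(y_true)


y_true = [100.0, 200.0]
y_pred = [110.0, 180.0]
print(mse(y_true, y_pred), smape(y_true, y_pred))
```

MSE is expressed in squared units of the target, so it should be computed on unscaled values when it needs to be interpretable, whereas sMAPE is a relative error and is easier to compare across series with different magnitudes.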

Note

init_orca_context is not needed if you just use the trained TSPipeline for inference, evaluation or incremental fitting.

Note

Incremental fitting on TSPipeline just updates the model weights the standard way, which does not involve AutoML.

5.3 Use Standalone Forecaster Pipeline

Chronos provides a set of standalone time series forecasters without AutoML support, including deep learning models as well as traditional statistical models.

View some example notebooks for Network Traffic Prediction.

The common process of using a Forecaster is shown below.

# set fixed hyperparameters, loss, metric...

f = Forecaster(...)

# input data, batch size, epoch...

f.fit(...)

# input test data x, batch size...

f.predict(...)

The input data can easily be obtained from TSDataset.

View Quick Start for a more detailed example. Refer to API docs of each Forecaster for detailed usage instructions and examples.

5.3.1 LSTMForecaster

LSTMForecaster wraps a vanilla LSTM model, and is suitable for univariate time series forecasting.

View Network Traffic Prediction notebook and LSTMForecaster API Doc for more details.

5.3.2 Seq2SeqForecaster

Seq2SeqForecaster wraps a sequence to sequence model based on LSTM, and is suitable for multivariate and multi-step time series forecasting.

View Seq2SeqForecaster API Doc for more details.

5.3.3 TCNForecaster

Temporal Convolutional Networks (TCN) are neural networks that use a convolutional architecture rather than a recurrent one. TCNForecaster supports multi-step and multivariate cases. Causal convolutions enable large-scale parallel computing, which gives TCN a lower inference time than RNN-based models such as LSTM.
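The "causal" property mentioned above means that the output at time t depends only on inputs at time t and earlier. A minimal plain-Python sketch of a single causal 1D convolution follows; it illustrates the concept only and is not the TCNForecaster implementation (`causal_conv1d` is a hypothetical helper, and dilation is omitted).

```python
def causal_conv1d(series, kernel):
    """y[t] = sum_k kernel[k] * series[t - k], treating values before the start as 0."""
    out = []
    for t in range(len(series)):
        acc = 0.0
        for k, w in enumerate(kernel):
            if t - k >= 0:
                acc += w * series[t - k]
        out.append(acc)
    return out


series = [1.0, 2.0, 3.0, 4.0]
kernel = [0.5, 0.5]  # average of the current and the previous value
print(causal_conv1d(series, kernel))
```

Each output position is computed independently of the other outputs, which is why all time steps can be evaluated in parallel, unlike a recurrent network that must process the steps one after another.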

View Network Traffic multivariate multistep Prediction notebook and TCNForecaster API Doc for more details.

5.3.4 MTNetForecaster

MTNetForecaster wraps an MTNet model. The model architecture mostly follows the MTNet paper with slight modifications, and is suitable for multivariate time series forecasting.

View Network Traffic Prediction notebook and MTNetForecaster API Doc for more details.

5.3.5 TCMFForecaster

TCMFForecaster wraps a model architecture that follows the implementation of the DeepGLO paper with slight modifications. It is especially suitable for extremely high-dimensional (up to millions) multivariate time series forecasting.

View High-dimensional Electricity Data Forecasting example and TCMFForecaster API Doc for more details.

5.3.6 ARIMAForecaster

ARIMAForecaster wraps an ARIMA model and is suitable for univariate time series forecasting. It works best with data that show evidence of non-stationarity in the sense of the mean, where an initial differencing step (corresponding to the “I”, or integrated, part of the model) can be applied one or more times to eliminate the non-stationarity of the mean function.
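The differencing step described above can be illustrated with a few lines of plain Python. This sketch only shows how differencing removes a trend in the mean; it is not how ARIMAForecaster is invoked, and `difference` is a hypothetical helper.

```python
def difference(series, order=1):
    """Apply first differencing `order` times: d[t] = s[t] - s[t-1]."""
    for _ in range(order):
        series = [b - a for a, b in zip(series, series[1:])]
    return series


trend = [3 + 2 * t for t in range(6)]   # linear trend, so the mean is not stationary
print(difference(trend))                # constant after one round of differencing
quadratic = [t * t for t in range(6)]   # quadratic trend needs two rounds
print(difference(quadratic, order=2))
```

Each round of differencing shortens the series by one point, and the number of rounds needed corresponds to the order of the integrated part of the model.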

View ARIMAForecaster API Doc for more details.

5.3.7 ProphetForecaster

ProphetForecaster wraps the Prophet model (site), an additive model in which non-linear trends are fit with yearly, weekly, and daily seasonality, plus holiday effects. It is suitable for univariate time series forecasting. It works best with time series that have strong seasonal effects and several seasons of historical data. It is robust to missing data and shifts in the trend, and typically handles outliers well.

View Stock Prediction notebook and ProphetForecaster API Doc for more details.

5.4 Use Auto forecasting model

Auto forecasting models are designed to be used in exactly the same way as Forecasters. The only difference is that you can set hp search functions as the hyperparameters, and the .fit() method will search for the best hyperparameter setting.

# set hyperparameters in hp search function, loss, metric...

f = Forecaster(...)

# input data, batch size, epoch...

f.fit(...)

# input test data x, batch size...

f.predict(...)

The input data can easily be obtained from TSDataset. Users can refer to the detailed API doc.

6 Anomaly Detection

Anomaly Detection detects abnormal samples in a given time series. Chronos provides a set of unsupervised anomaly detectors.

View some example notebooks for Datacenter AIOps.

6.1 ThresholdDetector

ThresholdDetector detects anomalies based on a threshold. It can be used to detect anomalies in a given time series (notebook), or used together with Forecasters to detect anomalies in newly arriving samples (notebook).

View ThresholdDetector API Doc for more details.
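The two usage patterns described for threshold-based detection can be sketched in plain Python. The functions below are hypothetical helpers written for this illustration and do not reflect the ThresholdDetector API; the data values are made up.

```python
def detect_by_range(values, low, high):
    """Indices whose value falls outside the closed range [low, high]."""
    return [i for i, v in enumerate(values) if v < low or v > high]


def detect_by_error(actual, forecast, max_error):
    """Indices where the absolute forecast error exceeds max_error."""
    return [i for i, (a, f) in enumerate(zip(actual, forecast)) if abs(a - f) > max_error]


cpu = [50, 52, 97, 51, 3]

# Pattern 1: fixed thresholds applied directly to the observed series.
print(detect_by_range(cpu, low=10, high=90))

# Pattern 2: compare new samples against a forecaster's predictions.
forecast = [51, 51, 52, 52, 50]
print(detect_by_error(cpu, forecast, max_error=10))
```

The first pattern needs only domain knowledge about valid value ranges, while the second adapts to the expected behaviour of the series because the threshold is applied to the forecast error rather than to the raw value.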

6.2 AEDetector

AEDetector detects anomalies based on the reconstruction error of an autoencoder network.

View anomaly detection notebook and AEDetector API Doc for more details.

6.3 DBScanDetector

DBScanDetector uses the DBSCAN clustering algorithm for anomaly detection.

View anomaly detection notebook and DBScanDetector API Doc for more details.

7 Generate Synthetic Data

Chronos provides simulators to generate synthetic time series data for users who want to overcome limited data access in a deep learning/machine learning project or who only want to generate some synthetic data to play with.

Note

DPGANSimulator is the only simulator Chronos provides at the moment; more simulators are on their way.

7.1 DPGANSimulator

DPGANSimulator adopts DoppelGANger, proposed in Using GANs for Sharing Networked Time Series Data: Challenges, Initial Promise, and Open Questions. The method is a data-driven unsupervised method based on a deep learning model with a GAN (Generative Adversarial Network) structure. The model features a pair of separate attribute and feature generators and their corresponding discriminators. DPGANSimulator also supports a rich and comprehensive input data (training data) format and outperforms other algorithms in many evaluation metrics.

Users may refer to detailed API doc.

8 Useful Functionalities

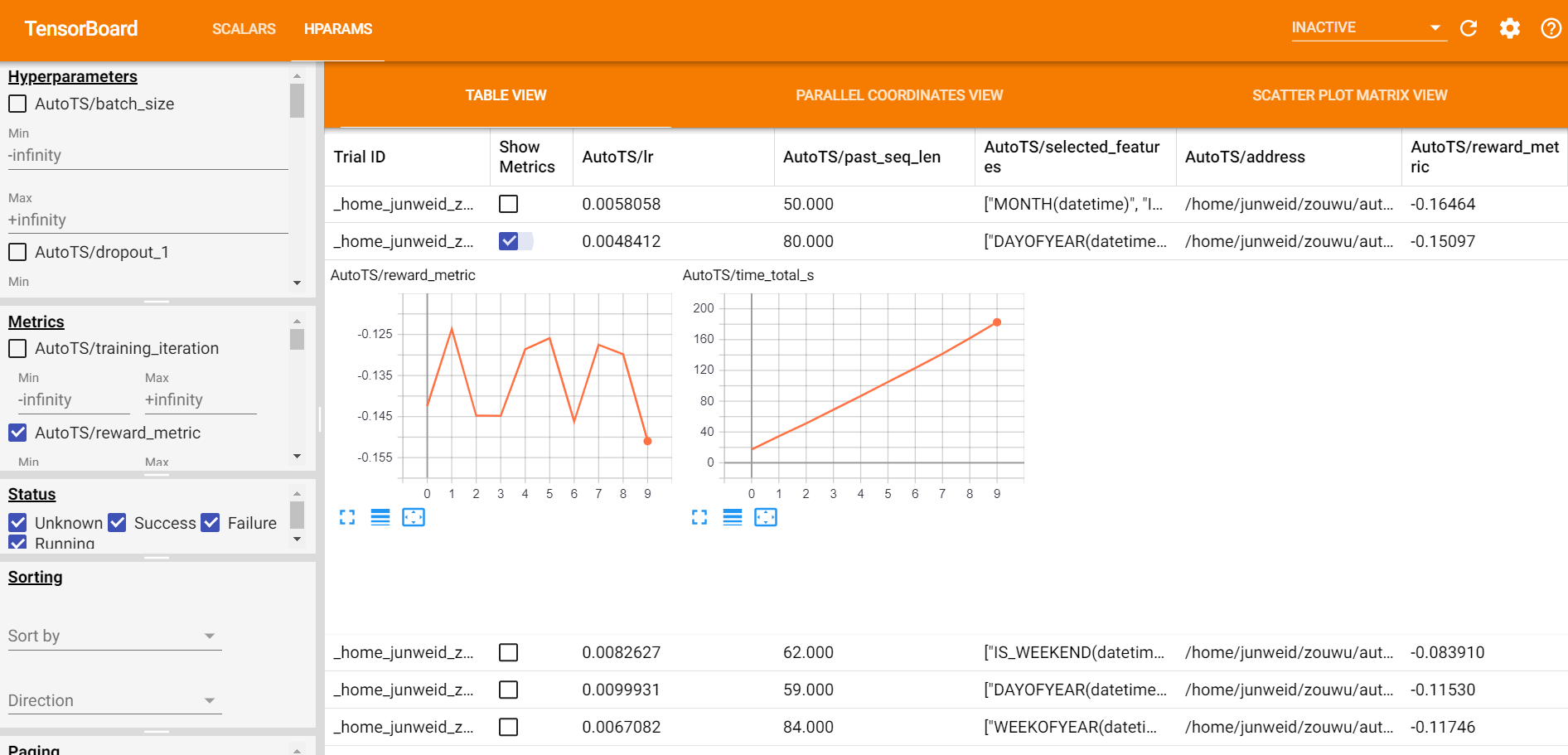

8.1 AutoML Visualization

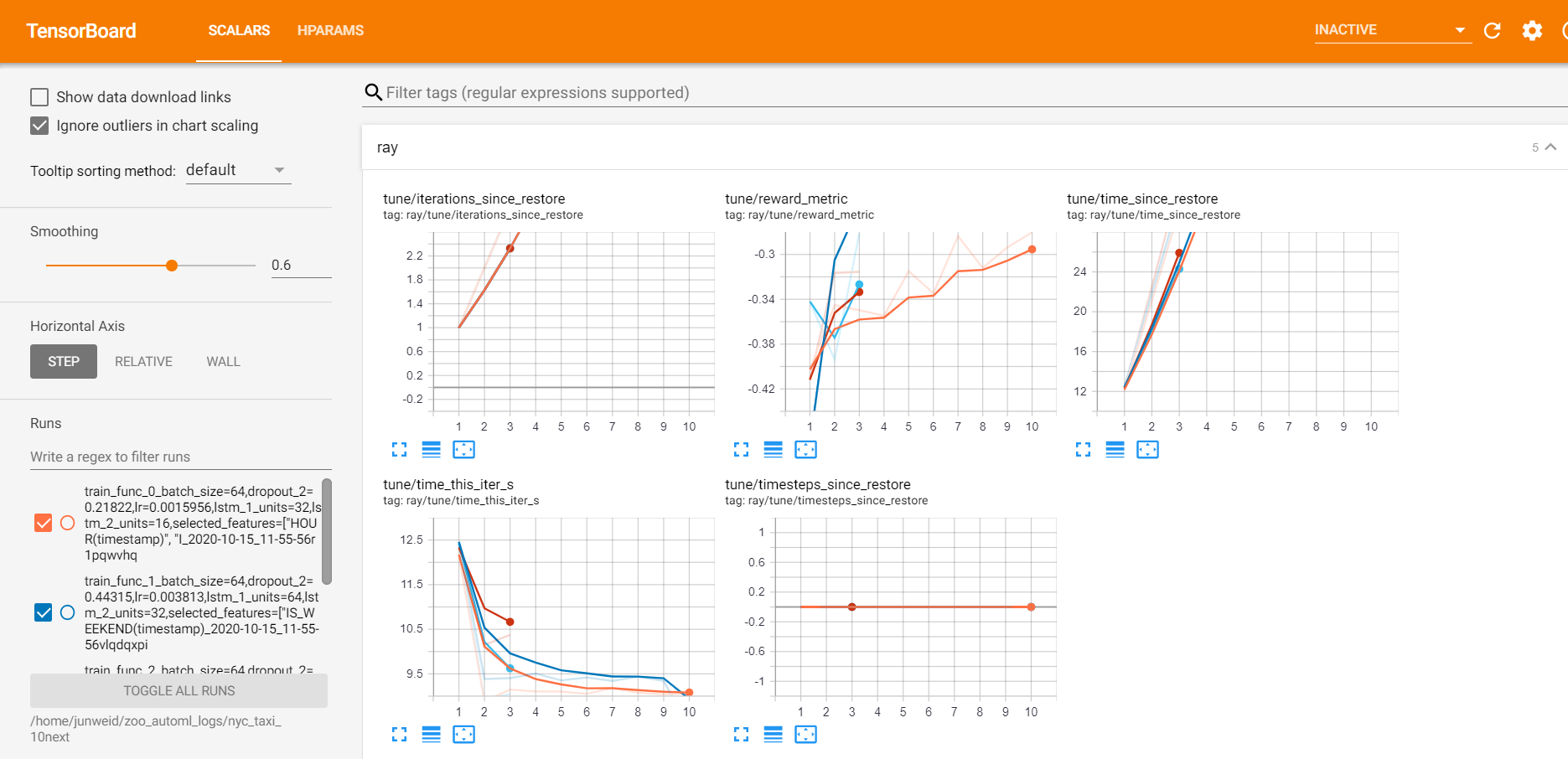

AutoML visualization provides two kinds of visualization. You may use them while fitting on auto models or AutoTS pipeline.

During the searching process, the visualizations of each trial are shown and updated every 30 seconds. (Monitor view)

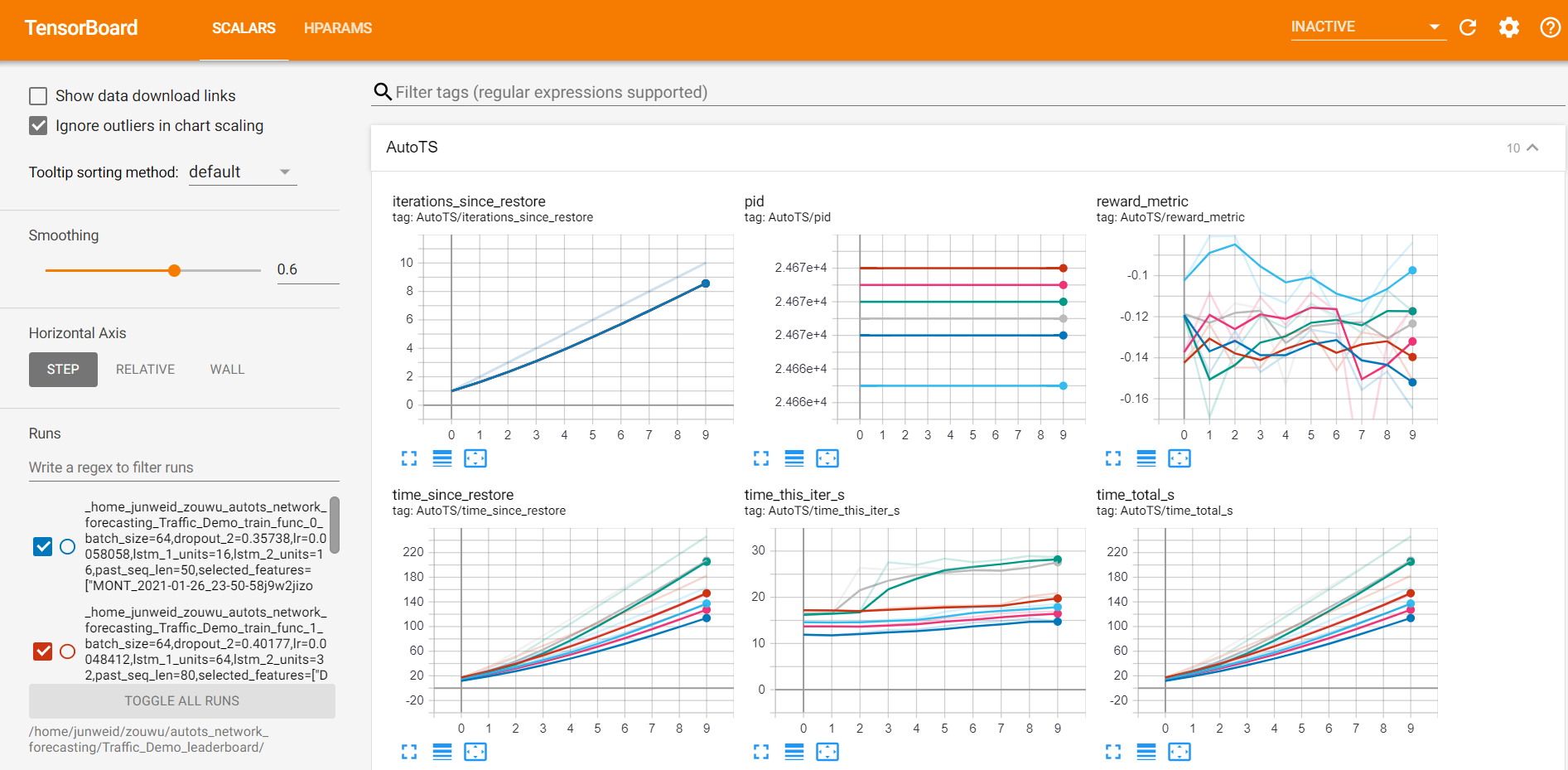

After the searching process, a leaderboard of each trial’s configs and metrics is shown. (Leaderboard view)

Note: AutoML visualization is based on tensorboard and tensorboardX. They should be installed properly before the training starts.

Monitor view

Before training, start the tensorboard server through

tensorboard --logdir=<logs_dir>/<name>

logs_dir is the log directory you set for your predictor (e.g. AutoTSEstimator, AutoTCN, etc.). name is the name parameter you set for your predictor.

The data in SCALARS tag will be updated every 30 seconds for users to see the training progress.

Leaderboard view

After training, start the tensorboard server through

tensorboard --logdir=<logs_dir>/<name>_leaderboard/

where logs_dir and name are the same as stated in Monitor view.

A dashboard of each trial’s configs and metrics is shown in the SCALARS tag.

A leaderboard of each trial’s configs and metrics is shown in the HPARAMS tag.

Use visualization in Jupyter Notebook

You can enable a tensorboard view in jupyter notebook by the following code.

%load_ext tensorboard

# for scalar view

%tensorboard --logdir <logs_dir>/<name>/

# for leaderboard view

%tensorboard --logdir <logs_dir>/<name>_leaderboard/

8.2 ONNX/ONNX Runtime support

Users may export their trained model (with or without auto tuning) to an ONNX file and deploy it on other services. Chronos also provides internal onnxruntime inference support for users who pursue low latency and higher throughput during inference on a single node.

LSTM, TCN and Seq2seq support ONNX in their forecasters, auto models and AutoTS. When using these built-in models, users may call predict_with_onnx/evaluate_with_onnx for prediction or evaluation. They may also call export_onnx_file to export the ONNX model file and, optionally, build_onnx to change the onnxruntime settings.

f = Forecaster(...)

f.fit(...)

f.predict_with_onnx(...)

8.3 Distributed training

LSTM, TCN and Seq2seq users can easily train their forecasters in a distributed fashion to handle extra large datasets and utilize a cluster. The functionality is powered by Project Orca.

f = Forecaster(..., distributed=True)

f.fit(...)

f.predict(...)

f.to_local() # collect the forecaster to single node

f.predict_with_onnx(...) # onnxruntime only supports single node

8.4 XShardsTSDataset

Warning

XShardsTSDataset is still experimental.

TSDataset is a single-threaded library with reasonable speed on large datasets (~10G). When you need to handle an extra large dataset or have limited memory on a single node, XShardsTSDataset can be used to provide exactly the same functionality and usage as TSDataset in a distributed fashion.

# a fully distributed forecaster pipeline

from zoo.orca.data.pandas import read_csv

from zoo.chronos.data.experimental import XShardsTSDataset

shards = read_csv("hdfs://...")

tsdata, _, test_tsdata = XShardsTSDataset.from_xshards(...)

tsdata_xshards = tsdata.roll(...).to_xshards()

test_tsdata_xshards = test_tsdata.roll(...).to_xshards()

f = Forecaster(..., distributed=True)

f.fit(tsdata_xshards, ...)

f.predict(test_tsdata_xshards, ...)